The other day, a co-worker, aware of my obsession with audiophile-grade equipment, asked if I could recommend a good pair of portable headphones. He was considering the new Bose QuietComfort Wireless 35, which uses Bluetooth, and was curious about the quality of sound at a $350 (USD) price point. I immediately went on a whole rant about codecs, DACs, and wireless audio (specifically over Bluetooth), and then tried to find and send him a link to some article describing how it all works. I was surprised to find barely any basic write-ups on the technology behind these topics and how each link in the chain impacts the audio quality. So here we are, kids: my attempt to break it down for you.

Let’s get right down to it, and apologies in advance if I speak to you like you’re five. That’s just my way of making sure that we’re all on the same page. First, there is the original recording – so if it’s already crap (as in, your friend sent you a guitar melody he captured with his iPhone), you’re already in the gutter in terms of quality. But, in most cases, I’m assuming you’re about to play back a mastered studio album from your favorite producer, and I’m guessing, with a high degree of certainty, that you will be playing back some digital files (this assumption, by the way, includes a CD). Somehow the music is already stored there in those bits and bytes, and I will conveniently skip the part on how that happens, since you’ve had no say in that “behind-the-scenes” process anyway. You either downloaded the files or ripped the CD yourself, and, usually, have that digital data stored on your [portable] playback device in some “file”. So far so good?

Digital Audio

Unless you’re dabbling with analog audio, like a record pressed on vinyl or magnetic tape playing on a reel-to-reel, you’re dealing with a digital representation of an analog original. It’s just an approximation of it, if you will, since we have to capture its truly unlimited essence with numbers. It may sound like the real thing, but it’s not – it’s just a copy.

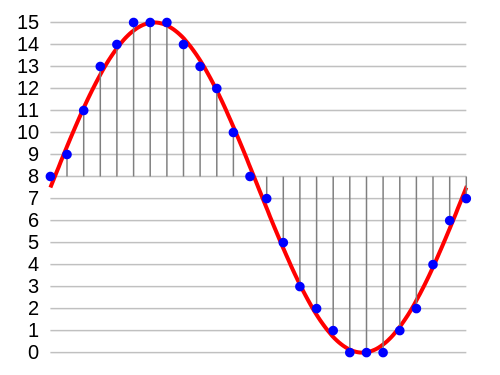

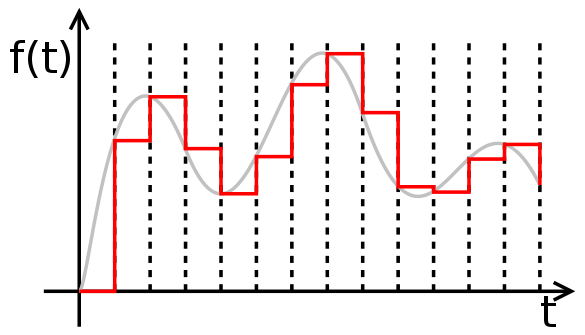

The digital data which represents the audio is stored in an encoded audio format, and the scheme that does the encoding and decoding is referred to as a “codec” (think MP3, FLAC, etc.; WAV, strictly speaking, is a container that usually holds plain uncompressed PCM). These codecs are used to encode and sometimes compress the stored digital audio even further, mainly for the purpose of saving disk space. This way you can carry more music with you and also stream it over your WiFi or cellular connection. An audio Compact Disc (CD) stores audio in an uncompressed PCM format (so do WAV and AIFF files, by the way), with the analog waveform sampled 44,100 times a second (44.1kHz), using a 16-bit integer to represent each sample value of the amplitude (loudness) of the original analog signal.
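To put a number on that, here is a rough back-of-the-envelope sketch of the raw data rate of CD audio. The helper name `pcm_bit_rate` is mine, and the two-channel (stereo) figure is the standard CD layout rather than something spelled out above.

```python
def pcm_bit_rate(sample_rate_hz: int, bit_depth: int, channels: int) -> int:
    """Bits per second needed for uncompressed PCM audio."""
    return sample_rate_hz * bit_depth * channels


CD_SAMPLE_RATE = 44_100  # samples per second, per channel
CD_BIT_DEPTH = 16        # bits per sample
CD_CHANNELS = 2          # assumption: stereo, as on a standard audio CD

cd_bps = pcm_bit_rate(CD_SAMPLE_RATE, CD_BIT_DEPTH, CD_CHANNELS)

# Convert bits per second to megabytes per minute (1 MB = 1,000,000 bytes).
cd_mb_per_minute = cd_bps / 8 * 60 / 1_000_000

print(f"CD audio: {cd_bps:,} bits/s, about {cd_mb_per_minute:.1f} MB per minute")
```

That works out to roughly 1.4 megabits every second, which is the figure to keep in mind whenever a bit rate comes up later on.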

Pulse-Code Modulation (PCM)

Picture a sound waveform (or use the image above), and imagine measuring the height of that curved line (that’s the amplitude or “volume” of the signal) at very short time intervals (that’s the sampling rate – we’re measuring it 44,100 times per second). That “16-bit” part represents the resolution of the encoded binary number – we’re using 16 bits (‘1’s and ‘0’s) for each sampled value. With 8 bits you can store 256 different levels (that’s 2 to the 8th power), and with 16 bits, 65,536 different levels (2^16). From the example image above (which actually uses 4 bits to store only 16 different levels), we will be storing 8, 9, 11, 13, 14, 15, 15, 15, etc.
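If you'd rather see that in code than in a picture, here is a minimal sketch of sampling and quantizing a tone. The function names `quantize` and `sample_sine` are made up for illustration, and the 1 kHz test tone is an arbitrary choice; the values it prints will not match the image above, which shows a different waveform.

```python
import math


def quantize(value: float, bits: int) -> int:
    """Map an amplitude in [-1.0, 1.0] to an integer level in [0, 2**bits - 1]."""
    levels = 2 ** bits
    clamped = max(-1.0, min(1.0, value))
    # Scale to [0, levels] and clamp the top edge into the last valid level.
    return min(levels - 1, int((clamped + 1.0) / 2.0 * levels))


def sample_sine(freq_hz: float, sample_rate_hz: int, n_samples: int, bits: int) -> list[int]:
    """Measure a sine wave at regular intervals and store each reading as an integer."""
    return [
        quantize(math.sin(2 * math.pi * freq_hz * n / sample_rate_hz), bits)
        for n in range(n_samples)
    ]


# Eight consecutive samples of a 1 kHz tone at the CD rate, stored with 4 bits each.
samples_4bit = sample_sine(1000.0, 44_100, 8, bits=4)
print(samples_4bit)
```

Swap `bits=4` for `bits=16` and the same eight readings land on a far finer grid of 65,536 levels instead of 16.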

OK, are you still with me, or did I already lose you? My point here is that our ‘music’ is digital, and yes, it’s already missing data! This is because we don’t know all of the values in between those blue dots. Based on the Nyquist-Shannon theorem, sampling at more than twice the maximum frequency we wish to capture should be good enough. Since the upper limit of human hearing is around 20,000 Hz, any sampling rate greater than 40,000 times a second is ‘theoretically’ OK. And that assumption is a whole other rant I can leave for later, but for now let’s keep going.
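In case you're wondering what goes wrong when you break that rule, here's a small sketch. A tone above half the sampling rate doesn't simply vanish; it "folds" back and shows up as a lower, wrong frequency (this is called aliasing). `alias_frequency` is a hypothetical helper of mine, and it assumes an ideal sampler with no anti-aliasing filter in front of it.

```python
def alias_frequency(tone_hz: float, sample_rate_hz: float) -> float:
    """Frequency at which a pure tone appears after sampling (ideal sampler assumed)."""
    nyquist = sample_rate_hz / 2
    folded = tone_hz % sample_rate_hz
    # Anything above the Nyquist limit is mirrored back below it.
    return folded if folded <= nyquist else sample_rate_hz - folded


SAMPLE_RATE = 44_100

for tone in (1_000, 20_000, 30_000):
    print(f"{tone} Hz tone is captured as {alias_frequency(tone, SAMPLE_RATE):.0f} Hz")
```

The 20,000 Hz tone survives intact at 44.1kHz, while the 30,000 Hz one comes back as 14,100 Hz, which is why recorders filter out everything above the limit before sampling.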

“Lossless” vs. “Lossy” Codec

Any “lossless” audio codec, such as FLAC or Apple Lossless (ALAC), is able to use another algorithm to compress the already stored digital audio even further (by as much as 50-60%) without losing any data. I am purposely avoiding the details here, but let’s just say that it works sort of like ZIP compression: when you decompress the file, you get the original back without any missing data. Again, the whole point behind this compression algorithm is just to save you some space.
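Here's a quick sketch of that round trip. To be loud about the assumption: this uses Python's general-purpose `zlib` (the same family of compression found in ZIP files) as a stand-in, not FLAC or ALAC, which use audio-specific prediction and will behave differently on real music. The helper `sine_pcm16` is made up for the example.

```python
import math
import struct
import zlib


def sine_pcm16(freq_hz: float, sample_rate_hz: int, seconds: float) -> bytes:
    """Generate a mono sine tone as little-endian 16-bit PCM bytes."""
    n = int(sample_rate_hz * seconds)
    samples = [
        int(32767 * math.sin(2 * math.pi * freq_hz * i / sample_rate_hz))
        for i in range(n)
    ]
    return struct.pack(f"<{n}h", *samples)


original = sine_pcm16(440.0, 44_100, 1.0)

compressed = zlib.compress(original, level=9)  # stand-in for a lossless audio codec
restored = zlib.decompress(compressed)

print(f"original:   {len(original)} bytes")
print(f"compressed: {len(compressed)} bytes")
print(f"bit-for-bit identical after decompressing: {restored == original}")
```

The sizes will vary wildly with the material (a pure test tone is far easier to squeeze than an actual album), but the last line is the part that matters: every single byte comes back.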

A “lossy” format, such as MP3, AAC or Ogg Vorbis, goes further and throws part of the signal away! Strictly speaking, this is not re-sampling: the encoder discards what it thinks is “useless” data, such as sounds above certain high and below certain low frequencies, or sounds masked by other sounds, that a human ear cannot hear. But because of that, you will never be able to get the original recorded data back – it is gone forever! That’s why it’s “lossy” – the data is lost! But… to most people that should still “sound” just fine.
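To see why "gone forever" is not an exaggeration, here is a deliberately crude toy. Big caveat: real lossy codecs like MP3 use perceptual models and work in the frequency domain; this sketch just chops the low-order bits off each sample, which is my own stand-in to show irreversibility and nothing more. `lossy_encode` and `lossy_decode` are hypothetical names.

```python
def lossy_encode(sample: int, dropped_bits: int = 8) -> int:
    """Toy 'lossy' step: throw away the lowest bits of a 16-bit sample."""
    return sample >> dropped_bits


def lossy_decode(code: int, dropped_bits: int = 8) -> int:
    """Best-effort inverse: the discarded bits can only be filled with zeros."""
    return code << dropped_bits


original = [12345, 12400, -2000]
decoded = [lossy_decode(lossy_encode(s)) for s in original]

print(f"original: {original}")
print(f"decoded:  {decoded}")
```

Notice that two different input samples (12345 and 12400) come out as the same value, so no decoder, however clever, can tell which one you started with.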

Another thing to consider here is that even MP3s and other lossy formats can have their own levels of compression, usually determined by the encoding bit rate. The latter refers to the number of bits (eight bits make one byte) per unit of time (in this case, a second) required to store and play back the audio in an uninterrupted manner – again, this matters for file size and for streaming music over lower-bandwidth networks. The higher the bit rate, the more data is stored to represent the audio signal, and the larger the resulting digital file. If you encode all of your MP3s at 320Kbps (kilobits per second), that should still sound pretty good (but never as good as an original or a lossless encoding). As an example, note that most web radios, such as Pandora, Rdio, and Rhapsody, will stream music at 192Kbps. Apple’s Beats, Google Play and Spotify will stream at 320Kbps (that’s good!). Meanwhile, streaming via your browser from Soundcloud, Mixcloud or Bandcamp is only at 128Kbps (the lowest and the worst).
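For a feel of what those bit rates mean in storage terms, here's a small sketch that turns a bit rate into a file size. The four-minute track length is an arbitrary assumption of mine, `stream_size_bytes` is a made-up helper, and it treats 1 Kbps as 1,000 bits per second while ignoring tags and container overhead.

```python
def stream_size_bytes(bit_rate_kbps: float, seconds: float) -> float:
    """Approximate size of an audio stream encoded at a constant bit rate."""
    return bit_rate_kbps * 1000 / 8 * seconds


TRACK_SECONDS = 4 * 60  # assumption: a four-minute song

sizes_mb = {
    kbps: stream_size_bytes(kbps, TRACK_SECONDS) / 1_000_000
    for kbps in (128, 192, 320)
}

for kbps, size in sizes_mb.items():
    print(f"{kbps} Kbps -> about {size:.2f} MB")
```

For comparison, the same four minutes of uncompressed 16-bit, 44.1kHz stereo PCM comes to a little over 42 MB.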

DACs

OK. So now we have this data (stored in a digital file, compressed using an audio codec) and we’ll need to convert it back to an analog signal. Why? Because we need to recreate that acoustic pressure with air vibrations, which will hit our human ear, and then be perceived and interpreted by our brain as “sound”. With this being its own complex subject, let’s just say that your typical headphones do just that: they use a moving coil that responds to an alternating magnetic field and moves the air back and forth with its diaphragm. The current (of that signal) that produces this magnetic field is “analog”, so in order to produce this analog signal from its digital data, we need a Digital to Analog Converter, known simply as a DAC (or, in layman’s terms, a “sound card”). OK?

So, once more, to summarize really quick (skipping all of the cables in between): original audio recording, sampled, stored in a file, compressed even further, read by some device, converted by a DAC from digital to analog signal, sent to your headphones, then into your ear, maybe your brain…

This DAC is very, very important, as it is the sole interpreter of what that analog sound wave should be like, based on its digital input data. The numbers we stored to represent the original signal are only samples, so the DAC has to interpolate the missing data points between them to produce a smoothly varying signal. The DAC in your iPhone is cheap and crappy! That’s why gearheads like me spend more money on getting that part just right! The same file converted by different DACs will sound different – never mind how expensive your headphones are. Yes, the cables, amps and headphones matter too, but playing an MP3 off of your iPhone is already a lost cause! Playing a lossless file through a terrible DAC is just as bad!
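For the curious, here is what "filling in the missing points" looks like in its textbook form. This is the idealized Whittaker-Shannon (sinc) interpolation from sampling theory, not a description of how any particular DAC chip works; real converters use practical filters that only approximate it. The names `sinc` and `reconstruct` are mine, and because the sketch only has a finite run of samples, the result is an approximation.

```python
import math


def sinc(x: float) -> float:
    """Normalized sinc: 1 at x = 0, and 0 at every other integer."""
    return 1.0 if x == 0 else math.sin(math.pi * x) / (math.pi * x)


def reconstruct(samples: list[float], sample_rate_hz: int, t_seconds: float) -> float:
    """Estimate the signal value at any instant from its samples (ideal interpolation)."""
    position = t_seconds * sample_rate_hz  # time measured in sample periods
    return sum(s * sinc(position - n) for n, s in enumerate(samples))


SAMPLE_RATE = 44_100
tone = [math.sin(2 * math.pi * 1000 * n / SAMPLE_RATE) for n in range(2000)]

# Ask for a value exactly halfway between sample 1000 and sample 1001.
midpoint = reconstruct(tone, SAMPLE_RATE, 1000.5 / SAMPLE_RATE)
expected = math.sin(2 * math.pi * 1000 * 1000.5 / SAMPLE_RATE)

print(f"reconstructed: {midpoint:.4f}, true value: {expected:.4f}")
```

The two numbers should land very close together, even though that in-between instant was never actually measured. How well a real DAC approximates this ideal is a big part of what you pay for.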

Wireless and Bluetooth

Alright, so we’ve got ourselves some digital audio, and we’ve got those wireless headphones (or a portable speaker) and now we need to send something to them in order to play music. What exactly are we sending and how is it getting there? Well, we can transmit an analog signal using frequency modulation (FM) over radio waves, but we know that doesn’t sound all that great. So instead we’ll use a wireless technology called Bluetooth to transmit data over short-wavelength radio waves from sender to receiver.

Without going into the technical details behind the implementation (the radio technology is actually called frequency-hopping spread spectrum), the main point is that we are transmitting digital data. That means that “something” still needs to convert that digital signal to an analog one. That something is, you guessed it, a DAC, built inside the receiving device. That means that your Bluetooth headphones must have a DAC inside of them! Remember my whole spiel on paying attention to a good DAC? Well, that choice has now been eliminated for you (not a good thing!).

It actually gets a bit worse. There is a limit to the number of digital bits we can send over this wireless protocol (Bluetooth 1.0 was about 1Mbps and 2.0 can manage up to 3Mbps). That limit to the bandwidth dictates just how much (and how fast) data we can transmit. Great for compressed lossy codecs – not so good for lossless audio. What’s even worse here is that in order to ensure that the data can be transmitted within the allowed bandwidth in “real-time” (that is, streamed without buffering, etc.), the Bluetooth sender will re-compress and re-encode the audio once again! There’s also the latest rage over the aptX codec, which claims to improve the transmission of sound via Bluetooth. The thing to keep in mind here is that aptX is still a codec – a proprietary audio compression algorithm – meaning that either way you slice it (unless it’s an aptX Lossless codec), you are still compressing and losing more data along the way.
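Here's the arithmetic behind that squeeze, as a rough sketch. It compares stream bit rates against the nominal link speeds quoted above; the usable audio throughput on a real Bluetooth link is lower than those headline numbers once protocol overhead is accounted for, so treat a "fits" here as an on-paper best case. `fits_in_link` is a hypothetical helper, and the CD figure assumes 16-bit stereo at 44.1kHz.

```python
BLUETOOTH_1_BPS = 1_000_000   # nominal figure quoted above for Bluetooth 1.0
BLUETOOTH_2_BPS = 3_000_000   # nominal figure quoted above for Bluetooth 2.0
CD_PCM_BPS = 44_100 * 16 * 2  # uncompressed CD audio, assuming stereo
MP3_320_BPS = 320_000         # a high-quality lossy stream


def fits_in_link(stream_bps: int, link_bps: int) -> bool:
    """True if the stream's bit rate does not exceed the link's nominal rate."""
    return stream_bps <= link_bps


for name, bps in (("CD PCM", CD_PCM_BPS), ("320Kbps MP3", MP3_320_BPS)):
    print(
        f"{name}: fits 1Mbps link: {fits_in_link(bps, BLUETOOTH_1_BPS)}, "
        f"fits 3Mbps link: {fits_in_link(bps, BLUETOOTH_2_BPS)}"
    )
```

Uncompressed CD audio already overshoots the 1Mbps figure on paper, which is why some form of compression on the sending side is hard to avoid.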

Don’t get me wrong – Bluetooth is a fantastic technology for digital data transmission, which has built-in auto-discovery, error-correction, security, and all of the wireless networking features. But it’s just not good for audiophile-grade sound, as it degrades the original signal even further in order to recreate it on the other end. If you use AirPlay, DLNA, or other WiFi-based technologies, for example, you’re transmitting the original digital audio data (including FLAC encoded files) over the existing network, and hopefully to a better DAC than the built-in one. For example, in my living room, I have a Sonos Connect, which streams my lossless music from my network attached storage (NAS), with its coax digital output connected to my Cambridge Azur 851D DAC, then an amplifier, and only then speakers.

OK… Whew! Did I miss something? Misrepresent a concept? Go too deep (or too narrow) on a particular topic? Should I cover more? Leave me your questions, comments, and most importantly all of your hate mail below!

Words by HC